Parallel scraping

Anyone who has done some web scraping in a language like Python has likely run into a common issue: blocking network requests.

Even if the scraper takes only a few milliseconds to process each page's HTML, downloading just 10 pages one after another makes the run noticeably longer, because most of the time is spent waiting on the network. There are several ways around this problem. Let's explore some of them with an example.

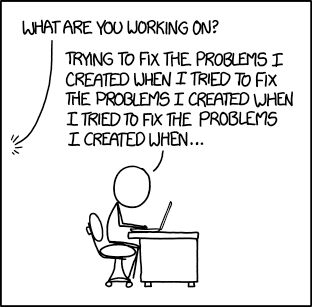

Suppose we want to download the first 1000 comics from XKCD. A simple script would be:

    from requests import get

    URL = "https://xkcd.com/%s/"
    pages = []

    for n in range(1, 1001):
        print("Downloading page %s" % n)
        pages.append(get(URL % n))

The threading Module

With this module, we can create multiple threads and make many requests simultaneously:

    from requests import get
    from threading import Thread

    URL = "https://xkcd.com/%s/"
    pages = []

    def down(n):
        print("Downloading page %s" % n)
        pages.append(get(URL % n))

    threads = [Thread(target=down, args=(n,)) for n in range(1, 1001)]
    for t in threads:
        t.start()  # start all threads
    for t in threads:
        t.join()  # block until all threads finish

This speeds up the program considerably, cutting the time on my computer from about 17 minutes to an acceptable 17 seconds. But the problem remains: if there were 10000 pages instead of 1000, could the machine handle that many threads efficiently? Would we hit a limit on the number of threads?

Threading Module with Workers

An alternative is to create workers: a limited number of threads that, instead of downloading just one page each, keep downloading pages until none are left.

    from requests import get
    from threading import Thread

    URL = "https://xkcd.com/%s/"
    WORKERS = 20

    pages = []
    to_download = [URL % n for n in range(1, 1001)]

    def worker():
        while True:
            try:
                # pop() is atomic, but checking the length first is not:
                # another worker may empty the list in between
                url = to_download.pop()
            except IndexError:
                return  # nothing left to download
            print("Downloading page %s" % url)
            pages.append(get(url))

    workers = [Thread(target=worker) for _ in range(WORKERS)]
    for w in workers:
        w.start()  # start all workers
    for w in workers:
        w.join()  # block until all workers finish

On my computer, this takes about 21 seconds to complete the download, slightly longer than one thread per page, but the processor load is much lower. With a large number of pages it still becomes quite slow, since at most 20 downloads run at any one time. But we still have one more option.
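Before moving on: a shared list is fine for a quick script, but the standard library's `queue.Queue` is designed for handing work to threads. Below is a minimal sketch of the same worker pattern built on it. The names `download_all` and `fetch` are made up for this example; `fetch` stands in for whatever does the actual download (for instance `requests.get`), and errors raised by `fetch` are not handled here.

```python
import queue
import threading


def download_all(urls, fetch, workers=20):
    """Call fetch(url) for every URL using a fixed number of worker threads.

    Returns a dict mapping each URL to whatever fetch returned for it.
    """
    tasks = queue.Queue()
    for url in urls:
        tasks.put(url)

    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            try:
                # get_nowait() raises queue.Empty once all work is taken
                url = tasks.get_nowait()
            except queue.Empty:
                return
            page = fetch(url)
            with lock:  # guard the shared dict explicitly
                results[url] = page

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With requests installed, a call would look something like `download_all([URL % n for n in range(1, 1001)], fetch=get, workers=WORKERS)`.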

The grequests Module

This module has the same interface as requests; the only difference is the extra g in the name you import. It installs easily with pip, and it makes requests asynchronously using the gevent library. When you ask for several pages at once, grequests creates and manages the greenlets (gevent's lightweight coroutines) that download them.

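    from grequests import get, map  # note: this map shadows the built-in map

    URL = "https://xkcd.com/%s/"

    reqs = [get(URL % n) for n in range(1, 1001)]  # requests are built here, not sent yet
    print("Downloading all pages")
    print(map(reqs))  # sends them concurrently and returns the list of responses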

This code is much simpler and more intuitive than the previous ones. Plus, it downloads the 1000 pages in just about 15 seconds without overloading the processor at all.
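The times quoted in this post are rough wall-clock figures from my computer and connection, so expect yours to differ. To compare the variants yourself, a small helper along these lines is enough. This is only a sketch: `timed` is a name invented for the example, and `time.perf_counter` comes from the standard library.

```python
from time import perf_counter


def timed(func, *args, **kwargs):
    """Call func(*args, **kwargs) and return (result, elapsed seconds)."""
    start = perf_counter()
    result = func(*args, **kwargs)
    return result, perf_counter() - start
```

Wrap each variant in a function and call, say, `pages, seconds = timed(variant)`. Running each one a few times helps smooth out network noise.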

There are other alternatives that I haven't explored yet, such as requests-threads and requests-futures. If you know more about this topic, feel free to leave a comment!

https://gist.github.com/nbcsteveb/b1819cf72e62883efc0dd5e6139ac5d3

Replying to Steve:

That's actually a great suggestion. With this approach, what gets multithreaded is not a worker but the actual task at hand, which is more readable even though it works in the same way. Thanks!
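For anyone who doesn't want to follow the link, here is a minimal sketch of that task-oriented style. It is an illustration using the standard library's `concurrent.futures`, not a copy of the gist. The names `download_pages` and `fetch` are invented for the example, with `fetch` standing in for something like `requests.get`.

```python
from concurrent.futures import ThreadPoolExecutor

URL = "https://xkcd.com/%s/"


def download_pages(numbers, fetch, max_workers=20):
    """Apply fetch to each comic URL on a bounded thread pool.

    The pool decides which thread runs which task; we only describe the
    task itself. Results come back in the same order as `numbers`.
    """
    urls = [URL % n for n in numbers]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))
```

With requests installed, `download_pages(range(1, 1001), fetch=get)` would play the same role as the worker version, without an explicit worker loop.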