After so long, I still find basic stuff in Python that I didn't know about. Here are some of my most recent TILs.

You can use underscores to separate digits in a number and they'll be ignored by the interpreter:

>>> 1000000

1000000

>>> 1e6

1000000.0

>>> 1_000_000

1000000

The sum method accepts a second parameter that is the initial value of the sum:

>>> base = 20

>>> sum([1, 2, 3], base)

26

With f-strings you can use the = modifier for including the code of the variable interpolation:

>>> a = 1.6

>>> print(f'We have {a=} and {int(a)=}.')

>>> We have a=1.6 and int(a)=1.

You can use classes to define decorators instead of functions:

>>> class show_time:

... def __init__(self, func):

... self.func = func

... def __call__(self, *args, **kwargs):

... start = time()

... result = self.func(*args, **kwargs)

... print(f'It took {time() - start:.4f}s')

... return result

...

>>> @show_time

... def test():

... return sum(n**2 for n in range(1000000))

...

>>> test()

It took 0.1054s

333332833333500000

The mode attribute for open is 'r' by default:

>>> with open('test.txt') as f:

... print(f.read())

>>> It works!

5 comments

Developers spend at least one third of their time working on the so-called technical debt [1,2,3]. It would make sense then that non-technical people working with developers had a good understanding of an activity that takes so much of their time. However, this abstract concept remains elusive for many.

I believe this problem has to do with the name "technical debt" itself. Many people are unfamiliar with debt, and thinking of how debt applies to software is not straighforward. But there is a concept that is way more familiar and might be more familiar: clutter. In software, as in any other aspect of life, clutter follows three basic rules:

- It increases with normal operation.

- Accumulating it leads to inneficiencies.

- Managing it in batches is time efficient.

Think of how it applies to house clutter: (1) everyday life tends to get your house messier if nothing is done to prevent it, (2) a messy home makes your day to day slower, and (3) the total time required to clean twenty dishes once is less than cleaning one dish twenty times. I empirically measured myself in this endeavor; it took me 5:10 to clean twenty dishes, versus the 15:31 that I spent cleaning them one by one over the course of multiple days.

The things I do for science

Due to the third rule, keeping the clutter at zero is a suboptimal strategy. I guess this is why in any given workplace we find clutter to some extent; think of office desks, factory workshops, computer files, email inboxes… and codebases. Just as it's inefficient to clean a single dish immediately after every use, in software development, addressing every instance of technical debt or "code clutter" as soon as it arises is not always practical or beneficial.

However, due to the second rule, letting the clutter grow indefinitely is also not a good strategy, because the inneficiencies created by the clutter can be bigger than the time required to keep it at bay. One of the hardest tasks in management is striking a good balance between both, because it's all about solving this optimization problem.

To find the optimum amount of clutter, you'd need to know how much does clutter grow depending on which operation you do, and how much easier it is to reduce clutter when you have more of it. But none of those two parameters is easily readable when looking at a project, so the way to steer a project is usually based in prior trial-and-error. Plus, those parameters are not static over time, which just makes it even worse.

I have seen many practices targeted at keeping technical clutter at bay, such as dedicating a day each week or allocating a percentage of the sprint backlog for refactoring. But I haven't found any foolproof data-based method for managing it, maybe because the distiction between adding new functionalities and de-cluttering is not always clear-cut and that makes it hard to report on.

What is clear is that, managing clutter requires a shift in the way we see it: it's not an accidental problem introduced by inneficient development, but rather an unavoidable byproduct of development.

No comments

Sometimes I have a conversation with an AI that looks particularly interesting and I share it with my friends or colleagues. I thought I could start a new post format, using the typical interview structure, to share it with the rest of the world. This is the first of those. Some replies are shortened (usually by sending "tl;dr" to the AI).

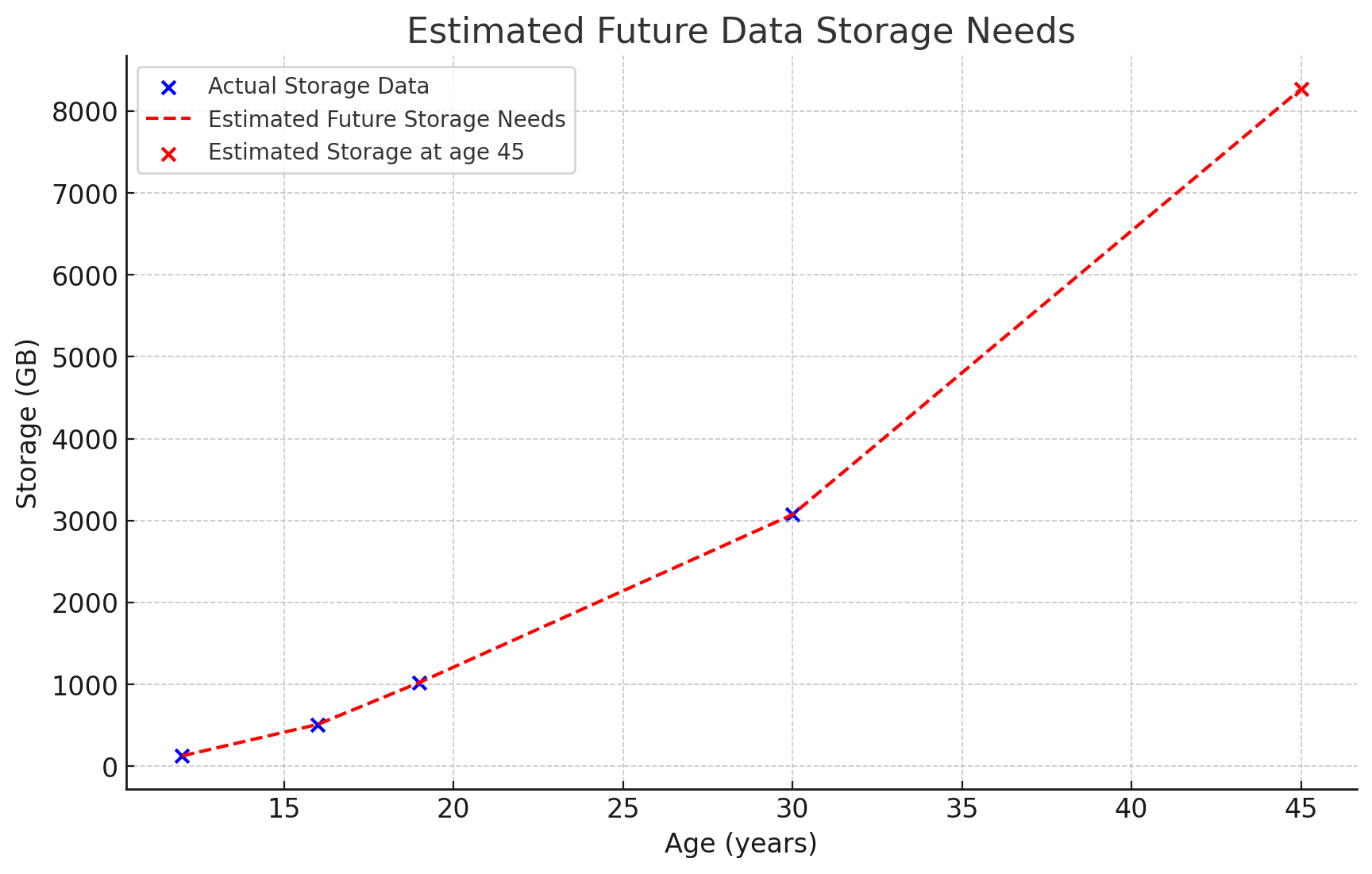

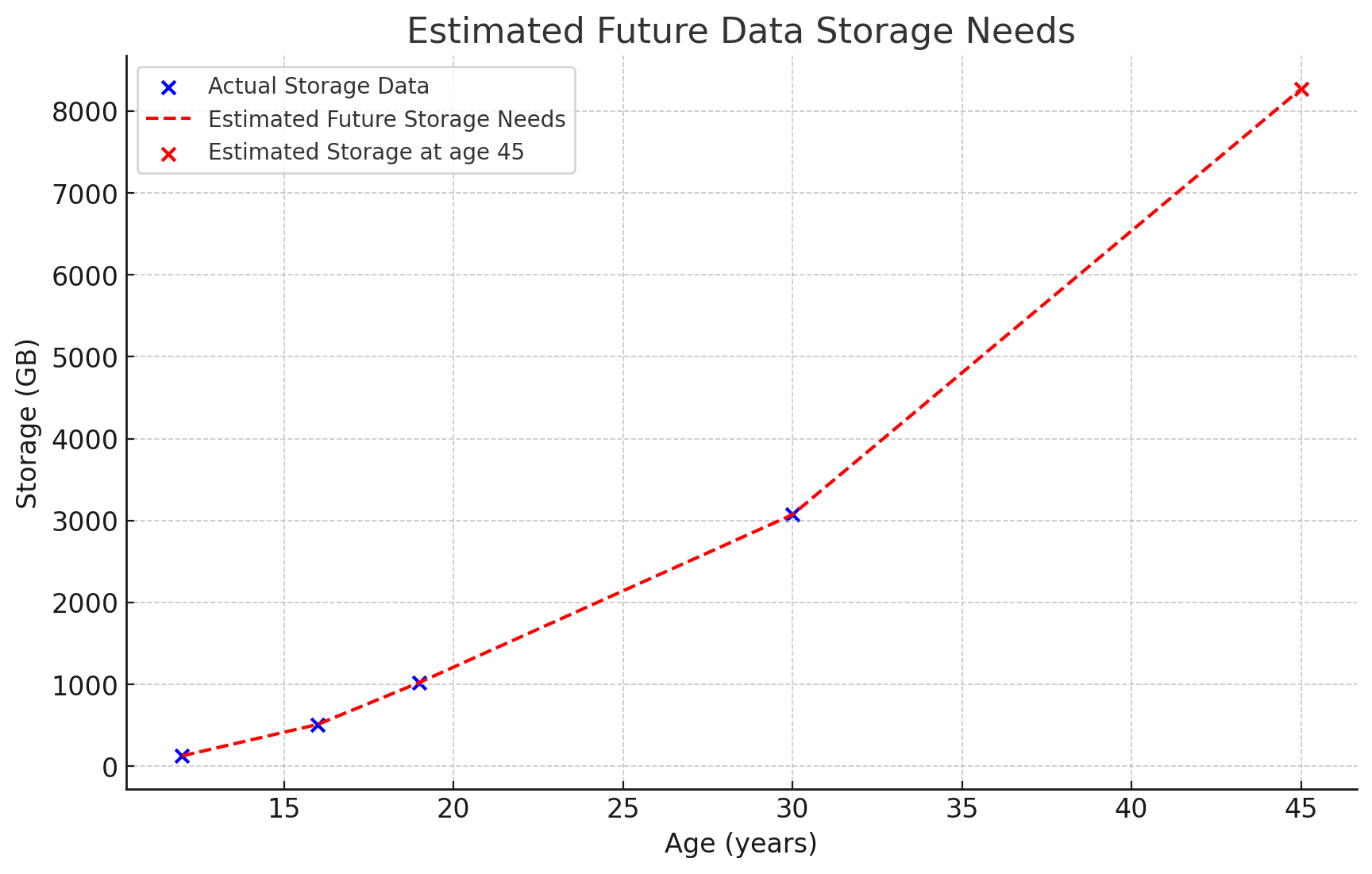

Q: I am running out of space in my computer and phone, and many of my acquaintances pay services like Google One or iCloud. I want to compare alternatives, but first, let's estimate my future storage needs. When I was 12 I had 128GB of storage, by 16 I had 512GB, by 19 I had 1TB. Now I'm 30 and I have 3TB. Plot this in a chart and show me what will be my data needs by the time I'm 45.

A: The projected storage needs by the time you are 45 are around 8.27TB. Here is an exponential plot:

Q: So, let's say I am looking for an storage solution for me, my partner, and possible newcomers. Since I'm a computer scientist, my data needs are greater than the average person. Let's say my partner needs half of what I do, and the new residents of our home will also needs half of what I do in total. What's the total by that time?

A: By the time you are 45, the estimated total storage requirement for your household would be approximately 16.54TB.

Q: Okay, so let's say 20TB to avoid falling short. Is 15 years a realistic measure of time or should I target a shorter/longer period? What alternatives do I have?

A: A 15-year timeframe might be overly optimistic given the pace of technological advancements and increased storage needs, but it is still reasonable. Here are the possible solutions:

- Cloud storage: services like Google One or iCloud for scalable and remote storage options.

- Network-Attached Storage (NAS): a flexible, upgradeable solution that can serve multiple users and functions within a household.

- Direct Attached Storage (DAS): an easy and cost-effective way to expand storage directly attached to your devices.

- Hybrid Solutions: Combine local storage options (NAS/DAS) with cloud storage for a balance of accessibility, security, and redundancy.

Q: I want to find the most cost-effective solution that is reliable to not lose data, allow for individual read/write permissions, and not be too dependent on companies swifting policies (I don't want to have to sign in to Synology NAS or to have Apple double the storage cost suddenly).

A: For a cost-effective and reliable solution with individual read/write permissions that minimizes dependency on company policies:

- Build Your Own NAS: Using open-source software like FreeNAS or OpenMediaVault, you can create a customized NAS without being tied to a specific vendor's ecosystem.

- Use Independent Cloud Storage: Choose a cloud provider with a strong track record of stable pricing and policies, and consider using encryption for added security and privacy.

Q: For reference, I'll paste you here the pricing of a few alternative source storages and you make a table with the 15-year cost. All of them allow at least 12TB storage and at least 4 users, so don't worry about those. One USD is 0.92 EUR, and I want all answers in €. Assume 4 users for the calculations and 50% downloaded per month. [pricings redacted for conciseness]

A: Here's the calculations you mentioned, based on the information provided without accounting for potential changes in rates or special offers over time:

| Service | 15-Year Cost (€) |

|---|

| Dropbox Advanced | 9,600 |

| iCloud | 10,800 |

| Backblaze | 11,923 |

| Wasabi | 25,155 |

| Box Enterprise | 25,515 |

| AWS Disks | 45,360 |

Q: Alright, so now let's do the same estimation with NAS. I want my solution to be reliable, so what RAID options do I have?

A: The following RAID levels have some degree of redundancy:

- RAID 1 (Mirroring): Provides redundancy by duplicating the same data on two or more disks. It's simple and offers excellent fault tolerance, as data can be recovered as long as one drive is operational.

- RAID 5 (Striping with Parity): This level offers a balance of good performance, good fault tolerance, and efficient storage utilization by distributing parity information across all drives. It can survive the failure of one drive.

- RAID 6 (Striping with Double Parity): Similar to RAID 5 but adds an extra layer of fault tolerance by using two sets of parity data. It can survive the failure of two drives, making it more reliable than RAID 5 at the expense of additional storage used for parity.

- RAID 10 (1+0): This naming convention reflects the combination of RAID 1 (mirroring) and RAID 0 (striping). It provides the benefits of both levels: improved performance from striping and redundancy from mirroring. It is very reliable and offers excellent performance but requires at least four drives and results in a 50% loss of storage capacity to redundancy.

Q: Ideally I want not to sacrifice 50% of my storage, and be able to recover automatically from bit rot and from the failure of one drive.

A: Given your requirements—minimizing storage sacrifice, automatic recovery from bit rot, and surviving the failure of one drive—RAID 5 seems to be the most suitable option for your needs.

Q: What disks would I need for a 20TB RAID 5? Do they have to have the same size? Give me the size required for 3-6 drives

A: In a RAID 5 setup for 20TB usable space, all disks should ideally be the same size to optimize storage use and performance. Here's the minimum size required per disk for different total drive counts:

- 3 drives: Each needs to be at least 10TB.

- 4 drives: Each needs to be at least 6.7TB.

- 5 drives: Each needs to be at least 5TB.

- 6 drives: Each needs to be at least 4TB.

Q: I did a bit of research and found NAS enclosures that are not company-dependent for ~200€ for 4 disks and ~270€ for 6 disks. Then I found 10TB drives for 300€, 8TB drives for 220€, 6TB drives for 200€. Compute my options in price. (yes, I'm too lazy to calculate it)

A: Given your price estimations, these are your alternatives with their price:

| Drives | Size (TB) | Cost (€) | Storage |

|---|

| 3 | 10 | 1100 | 20 |

| 4 | 8 | 1000 | 24 |

| 5 | 6 | 1470 | 24 |

Q: Alright, so looking at this pricing, it seems that 3-4 drives makes more sense for me. I'd rather go with 3, as it lets me expand the NAS in the future. What do you need to know to include also the electricity bill?

A: To calculate the NAS electricity consumption cost over 15 years, I need to know how many watts (W) are consumed by the NAS, how many hours a day it will be working, and what is the price per kilowatt-hour (kWh) where you are located.

Q: The max energy consumption is 36.5W, my electricity is 0.10689€/kWh, and I want it to run 24/7. Let's assume this price won't change (I hope it scales with my purchasing power).

A: Then the estimated cost to operate the NAS over 15 years, adding a constant consumption, is approximately 513.21€. Note that this computation assumes that the energy consumption is always maximum, which might be higher than the real number.

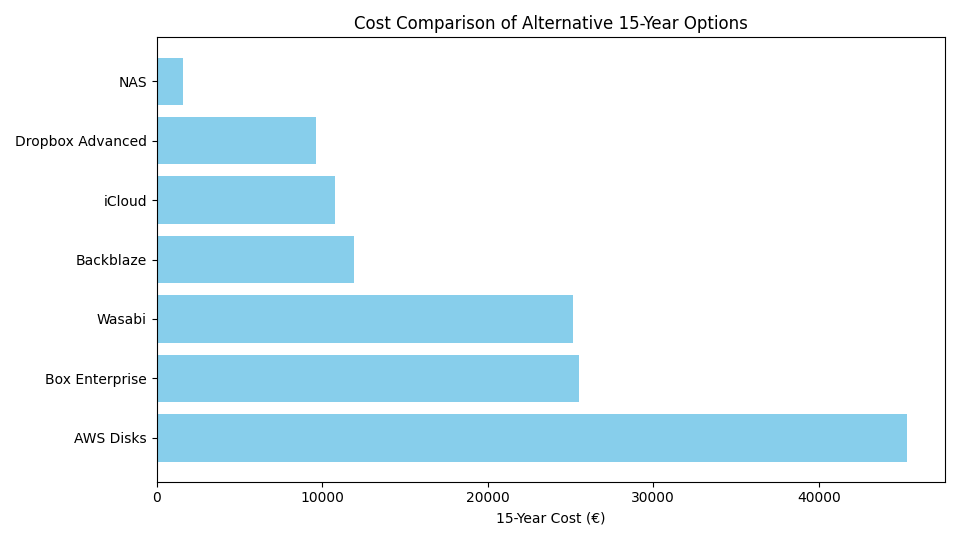

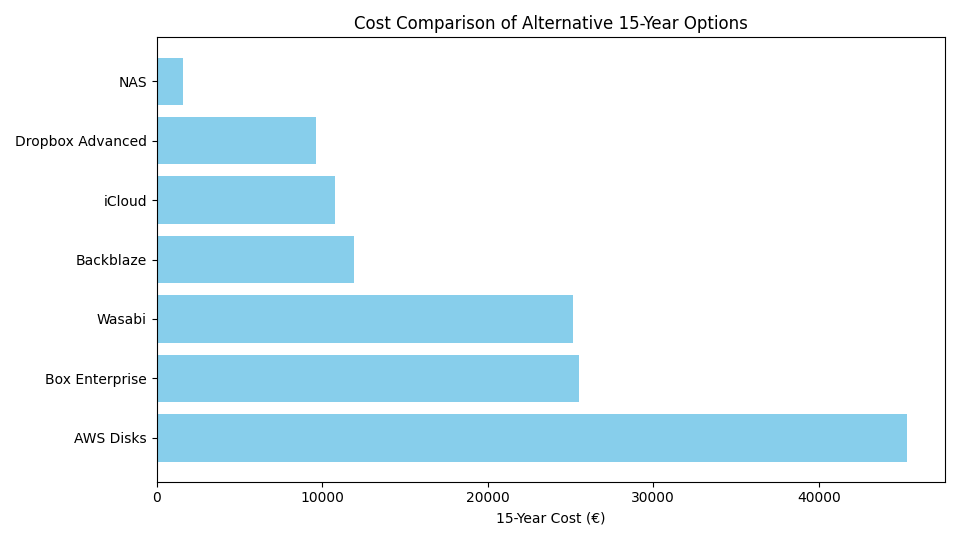

Q: So finally, update my alternatives table including a row with the cost of running my own NAS. Add a column for a % of difference. Give your conclusion and plot it.

A: Here is the final table. Based on this difference, running your own NAS seems the most viable and cost-efficient alternative.

| Alternative | 15-Year Cost (€) | Difference to NAS |

|---|

| NAS | 1,600 | Reference |

| Dropbox Advanced | 9,600 | 500% |

| iCloud | 10,800 | 575% |

| Backblaze | 11,923 | 645% |

| Wasabi | 25,155 | 1,472% |

| Box Enterprise | 25,515 | 1,495% |

| AWS Disks | 45,360 | 2,735% |

And that's it. Probably there are many factors that are not considered in this comparison. For instance, I don't need the 20TB right now so I could start with cheaper storage solutions and scale afterward. Also, the cost of fixing possible problems with storage shouldn't be ignored. But the cost of fighting against iCloud to download my pictures to a disk is also higher than I expected. I also want to learn more about what options do I have to see my data besides a file explorer, as I'm sure that the integration won't be as seamless as iCloud syncing with my Photos app.

What do you think, should I have a NAS?

1 comment

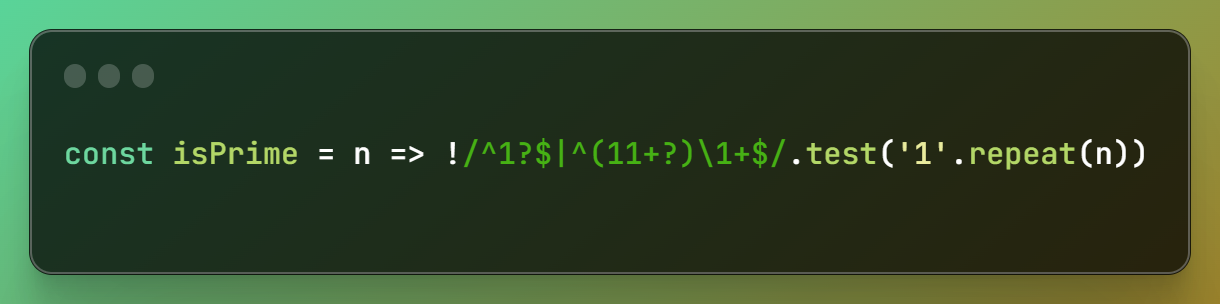

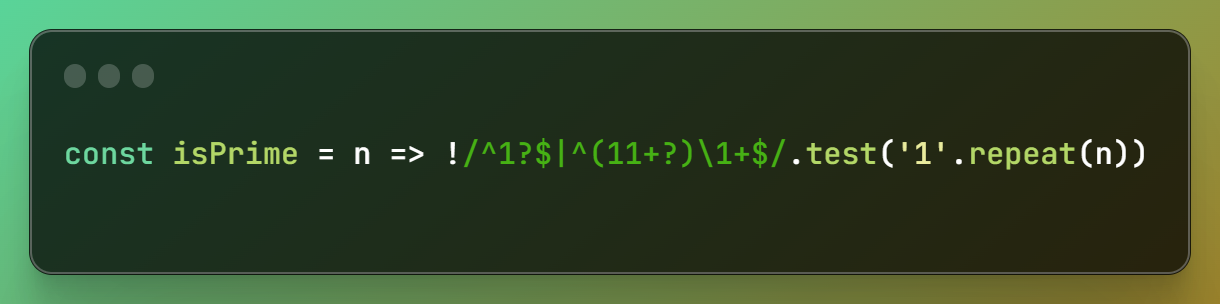

The suggestions coming from Github Copilot look sometimes like alien technology, particularly when some incomprehensible code actually works. Recently, I stumbled upon this little excerpt that tests if a number is prime (and it actually works):

The ways of the universe are mysterious

Let's dissect the expression to understand it a bit better. First, the number that we are trying to check is converted into a sequence of that same amount of ones with '1'.repeat(n). Hence, the number 6 becomes 111111. We can already see why this is a fun trivia and not something you should be using in your code (imagine testing for 1e20), and why should always inspect the code from Copilot.

This list of ones is tested against the regex, so that if there is some match, the number is not prime. If you're not very used to regular expressions, I suggest learning it with some resource like RegexOne or Regex Golf; it's one of those tools that come in handy regardless of the technology you use, either to test strings or to find and replace stuff quickly. It's combines really well with the multiple cursors from modern IDEs.

The regex /^1?$|^(11+?)\1+$/ will then only match non-prime numbers, so let's inspect it. First, it can be split into two expressions separated by a disjunction operator |. The first is ^1?$, which will match zero or one, the first two non-prime natural numbers. Then, ^(11+?)\1+$, which is where the magic occurs. The first part (11+?) will match a sequence of two or more ones, but in a non-greedy way, so that it will match the smallest possible sequence. The second part \1+ will then match the same sequence repeated one or more times.

Since the whole expression is anchored to the beginning and the end of the string using ^ and $, it will only match strings made of some sequence that is repeated a number of times. And how can a sequence be a repeated a number of times? Well, not being a prime number. For instance, in the case of 6, the sequence 11 is repeated three times, so it matches the expression, because 6 is the product of 2*3.

^1?$

|

^

(11+?)

\1+

$

The original trick was developed in 1998 by @Abigail, a hacker very involved in the development of Perl, who keeps writing wild regex solutions to problems such as such as completing a sudoku or solving the N-Queens problem to this day. This expression is resurrected every few years, puzzling new generations of programmers. The next time you see one of these AI weird suggestions, if you pause to inspect it and do a bit of code archeology, you might find another piece of programming history.

Related posts:

No comments

After many years of making backends for one or another project, I find myself I keep frequently writing the same boilerplate code. Even if I tend to reuse my templates, the code ends up diverging enough to make switching between projects take some headspace. In an attempt to solve this, I created oink.php, a single-file PHP framework focused on speed and simplicity when building JSON APIs and web services.

function comment_create() {

$post_id = id("post_id");

$author = email("author");

$text = str("text", min: 5, max: 100);

check(DB\post_exists($post_id), "postNotFound");

return ["id" => DB\create_comment($post_id, $author, $text)];

}

That simple function is enough to create an endpoint with route /comment/create that takes three parameters post_id, author and text, validates them, and returns a JSON with the id of the new post. And to run it, you just need to add the oink.php file to your root folder, and point it to the file that defines your endpoints.

This library borrows some ideas I've been using in my personal projects for a while to speed up development. First, the routing is made by mapping API paths to function names, so I skip the step of creating and maintaining a route table. Also, all endpoints are method-agnostic, so it doesn't matter if they are called using GET, POST, DELETE or any other method; the mapping will be correct.

I also merge POST params, JSON data, files, cookies and even headers into a single key-value object that I access through the validation functions. For example, calling str("text", min: 5, max: 100) will look in the request for a "text" parameter, and validate that it is a string between 5 and 100 characters, or send a 400 error otherwise.

These tricks are highly non-standard and create some limitations, but none of them is unsolvable. This attempt of placing dev speed before everything else, including best practices, is what made me think of Oink as a good name for it. The library should feel like a pig in the mud: simple and comfortable, even though it's not the cleanest thing in the world.

Most of Oink's code comes from battle-tested templates I have been using for my personal projects. This blog's server, which also hosts several other applications, manages around 2000 requests per hour. Despite DDOS attempts or sudden increases in traffic, the server's CPU and memory usage rarely exceeds 5%, thanks to the good ol' LAMP stack. While my professional projects often utilize Python, the scalability and maintainability of running multiple PHP projects on a single Apache server showcases the stack's efficiency. It's evident why PHP still ranks the most used backend language in most reports.

To explore oink.php further or contribute to its development, visit the GitHub repository. While I recommend frameworks like Laravel or Symfony for larger enterprise projects needing scalability, Oink offers a compelling alternative for developers prioritizing speed and simplicity.

No comments